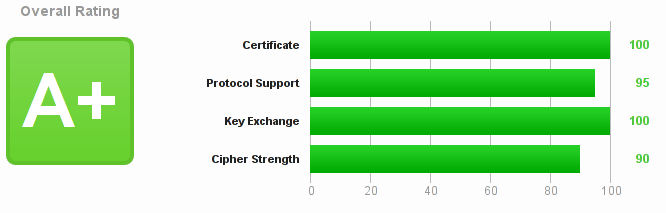

In the light of the latest Internet espionage and surveillance revelations, I started to investigate web server security more closely. As a result, I came up with a slightly over-the-top setup that maximizes security and scores a solid A+ rating at SSL Labs.

Note: The cipher strength score could be raised to 100 by using only 256-bit encryption, but that would mean dropping Java 7 support.

Recently, SSL Labs updated security rating criteria in their server testing tool, so it has become a bit more difficult to achieve such a high score.

Getting an A(+) rating is more than a matter of nerd pride. Rather, it indicates that a site tries to deal effectively with known security exploits and attack vectors.

Design philosophy and goals

The goal is to prevent eavesdropping and man-in-the-middle attacks. We want to make it as difficult as possible to decrypt or hijack communication between server and client. As the client’s integrity is outside of our control, we must make sure at least the server-side is configured appropriately.

With an appropriate client, we strive to provide the highest possible level of confidentiality, integrity and trust, so that data exchanged between systems cannot be intercepted or modified and sensitive information is not disclosed.

Key highlights include:

- TLS is the only supported protocol

- Encryption with 128 bits or stronger

- Only use cipher suites without known vulnerabilities [1]

- Ignore clients with insufficient capabilities

The guys at takeitapart.com achieved the highest test score despite making concessions in regard to protocol choice, client support and forward secrecy.

Personally, I do not care for Windows XP / Internet Explorer 6-8 support anymore and from a security point of view nobody should be using these anyway.

Checklist

A lot of inspiration came from reading Mozilla’s server-side wiki and Julien Vehent’s SSL guide. Both provide a lot of detailed information.

I am not going to point out how to compile nginx manually. Nonetheless, it may be worthwhile to do so if you are running a rather outdated software stack. Some stronger cipher suites are only available in newer versions of OpenSSL. Server Name Indication (SNI) is another compelling reason to update when hosting several HTTPS sites on the same IP address.

Strong private key and trusted certificate

As almost no CA offers support for ECDSA right now, there is no other option than using high grade RSA keys. Using 2048 bit strength is considered good practice.

Besides a strong private key, choose a Certificate Authority (CA) with a good track record and acceptance. For starters and low budgets: StartSSL offers free (as in beer) class 1 certificates you’ll have to renew every 12 months.
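As a rough illustration, the key and the certificate signing request (CSR) for the CA could be created along these lines. This is only a sketch: the helper name make_key_and_csr, the file names and the subject example.org are placeholders I made up for the example.

```shell
# Sketch: create a 2048 bit RSA private key and a matching CSR.
# make_key_and_csr is a made-up helper; all arguments are placeholders.
make_key_and_csr() {
    key_file="$1"
    csr_file="$2"
    common_name="$3"

    # generate the private key with owner-only permissions
    ( umask 077 && openssl genrsa -out "$key_file" 2048 ) || return 1

    # create the signing request that is submitted to the CA
    openssl req -new -sha256 -key "$key_file" \
        -subj "/CN=$common_name" -out "$csr_file"
}
```

Calling make_key_and_csr example.org.key example.org.csr example.org should leave both files in the current directory, and openssl req -in example.org.csr -noout -verify checks the request's self-signature. Your CA may ask for more subject fields than just the common name.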

High grade protocols and cipher suites

As virtually all modern clients support TLS, support for the SSL protocol is dropped entirely. Furthermore, only cipher suites with forward secrecy will be used.
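To see what a cipher string actually enables, OpenSSL can expand it locally. A minimal sketch, assuming the openssl binary on your machine matches the library nginx is linked against; list_suites is just a name I picked.

```shell
# Sketch: print the cipher suites an OpenSSL cipher string expands to,
# one per line, with protocol version, key exchange (Kx), authentication
# (Au), encryption (Enc) and MAC columns.
list_suites() {
    openssl ciphers -v "$1"
}
```

For example, list_suites 'ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-GCM-SHA384:!aNULL:!RC4' should only show suites whose key exchange column reads Kx=ECDH or Kx=DH, which are the ones providing forward secrecy. Newer OpenSSL releases may additionally print TLS 1.3 suites, which are not selected through this string.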

To increase security when falling back to Diffie-Hellman based key exchange, we’ll generate larger DH parameters (the default is 1024 bits):

$ openssl dhparam -rand /dev/urandom -out /etc/nginx/dhparam.pem 4096

Please note: This breaks compatibility with Java 6.

Forcing HTTPS only

Website access via HTTP is redirected to HTTPS with a cacheable 301 response.

The Strict Transport Security HTTP header instructs clients to make future requests over HTTPS only. Be careful when specifying ‘includeSubDomains’, as there is no way to revert it in a timely manner if max-age is set very high.
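One way to double check the header from the command line is to pull the max-age value out of the response headers. A small sketch, assuming curl is installed; hsts_max_age is a made-up helper and the parsing is deliberately simplistic (it expects a lowercase max-age directive).

```shell
# Sketch: extract the max-age value of a Strict-Transport-Security header
# from raw response headers read on stdin. Header names are matched
# case-insensitively; prints nothing if the header is missing.
hsts_max_age() {
    tr -d '\r' |
        grep -i '^strict-transport-security:' |
        sed -n 's/.*max-age=\([0-9][0-9]*\).*/\1/p' |
        head -n 1
}
```

Running curl -sI https://example.org | hsts_max_age should then print the configured lifetime in seconds, and nothing at all if the header is not sent.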

Make sure to set the HttpOnly and Secure flags on all cookies. Otherwise you’re exposed to several attacks at the application level, such as session hijacking.

For more details on this see https://en.wikipedia.org/wiki/HTTP_cookie#Secure_cookie.

Performance tweaks

Increase number of workers

By setting worker_processes to auto, nginx will fork as many workers as there are CPU cores available. Thereby we can mitigate computational overhead and spread the load across all available CPUs. If you have more than 8 cores available, you may want to hardcode this to a lower value instead. Running too many workers can have diminishing returns.
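If you would rather hardcode the value, something like the following could compute it. This is a sketch for systems that provide getconf; suggested_workers is a made-up helper, and the default cap of 8 is only the rule of thumb from above, not a measured optimum.

```shell
# Sketch: print the number of online CPU cores, capped at an upper limit
# (first argument, defaults to 8).
suggested_workers() {
    cores=$(getconf _NPROCESSORS_ONLN)
    max=${1:-8}
    if [ "$cores" -gt "$max" ]; then
        echo "$max"
    else
        echo "$cores"
    fi
}
```

The printed number can then be used in place of auto in the worker_processes directive.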

Caching SSL sessions

Holding all established SSL sessions in a shared cache (accessible to all workers) greatly improves the performance of subsequent connections, because returning clients can resume a session instead of doing a full handshake. In addition, we’ll set a relatively high keep-alive timeout so multiple requests can re-use the same connection. The latter setting is most effective when serving lots of static assets for each page view.
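Whether the session cache is actually hit can be checked with the -reconnect option of openssl s_client, which connects once and then reconnects several times using the same session. A sketch; count_reused is a made-up helper that simply counts the handshakes reported as reused.

```shell
# Sketch: count resumed handshakes in "openssl s_client -reconnect" output
# read from stdin. s_client reports each handshake on a line starting
# with either "New," or "Reused,".
count_reused() {
    grep -c '^Reused,'
}
```

Running openssl s_client -connect example.org:443 -reconnect < /dev/null 2> /dev/null | count_reused should print a value above zero if session resumption works, and 0 if every connection required a full handshake.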

OCSP stapling

OCSP stapling saves the client a round trip to the Certificate Authority when checking whether a certificate has been revoked. Enabling stapling is recommended if dealing with mobile clients, as every additional request hurts.

I noticed odd behavior with OCSP, where stapled responses were sent only after a couple of HTTPS requests had already been handled.

You can check whether stapling works using openssl:

$ openssl s_client -connect <hostname>:443 -tls1 -tlsextdebug -status
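Since the first few answers may come without a stapled response, it helps to reduce the s_client output to a short verdict and repeat the check a few times. A sketch; stapling_status is a made-up helper that looks for the status line openssl prints for a successful OCSP response.

```shell
# Sketch: read "openssl s_client -status" output from stdin and report
# whether it contains a successful stapled OCSP response.
stapling_status() {
    if grep -q 'OCSP Response Status: successful'; then
        echo "stapled"
    else
        echo "not stapled"
    fi
}
```

It is meant to be fed from the check above, for example openssl s_client -connect <hostname>:443 -status < /dev/null 2> /dev/null | stapling_status.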

SPDY support

The SPDY protocol, which can help reduce latency when loading pages, is supported in most modern browsers.

nginx configuration

This setup should be a good starting point:

# mitigate extra cpu costs by running as many workers as cpu-cores are available
# (worker_processes belongs in the main context, not inside http)

worker_processes auto;

http {

# ... remaining config ...

# redirect http traffic to https

server {

listen 80;

server_name .example.org;

# 1 year cacheable 301 redirect

add_header "Cache-Control" "public, max-age=31536000";

rewrite ^ https://example.org$uri permanent;

}

# secured host

server {

# enable spdy for modern clients

listen 443 ssl spdy;

server_name example.org;

root /path/to/webroot;

# loads certificate and private key

ssl_certificate /path/to/certificate;

ssl_certificate_key /path/to/private-key;

# strongest ciphers first, explicitly blacklisting ciphers

ssl_ciphers 'ECDHE-RSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:DHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-SHA256:DHE-RSA-AES256-SHA:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:!aNULL:!eNULL:!EXPORT:!CAMELLIA:!DES:!MD5:!PSK:!RC4';

# prefer above order of ciphers

ssl_prefer_server_ciphers on;

# tls only

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

# using more than 1024 bits will break java 6 compatibility ...

# ssl_dhparam /etc/nginx/dhparam.pem;

# enables ocsp stapling

# ssl_trusted_certificate /path/to/trust.pem;

# ssl_stapling on;

# ssl_stapling_verify on;

# resolver 208.67.222.222 208.67.220.220 valid=300s; # opendns

# resolver_timeout 15s;

# performance optimization

ssl_session_cache shared:SSL:10m;

ssl_session_timeout 10m;

keepalive_timeout 70s;

# enforce https for 1 year - no more self-signed certificates

add_header "Strict-Transport-Security" "max-age=31536000; includeSubDomains";

# your vhost/backend settings

location / {

# ...

}

}

}

Optional settings are commented out, because they require additional manual actions outlined above. All directives assume you’re using a recent version of nginx (1.4+) and OpenSSL (1.0.1+).

If in doubt about how to fill in the blanks, you may want to check out more complete nginx configuration examples.

Next steps

I’ll probably have to monitor the performance implications. Encrypting traffic has a tremendous impact on throughput and latency. The initial handshake in particular consumes large amounts of CPU time. Average response times have more than doubled compared to traffic over HTTP. While the additional overhead is barely noticeable on a desktop, I noticed an abysmal mobile experience.

Overall I’m quite happy with the results, though. In the next couple of weeks I’ll experiment a bit more with various settings to find the best compromise between performance and security. After all, everything needs to scale these days ;)

Recommended reading

- [1] BEAST attacks are not mitigated server-side; instead, responsibility is pushed to the client. This permits getting rid of RC4.